Doberman VC — Research Note

Topic: Comparative Analysis — OpenAI GPT-5 vs Anthropic Claude 4.5

Date: October 3, 2025

Executive Summary

- Claude 4.5 dominates coding benchmarks (82.1% SWE-bench vs 74.9% GPT-5), establishing itself as the premier model for software engineering tasks, autonomous agents, and sustained workflows (30+ hour runs demonstrated).[1][2]

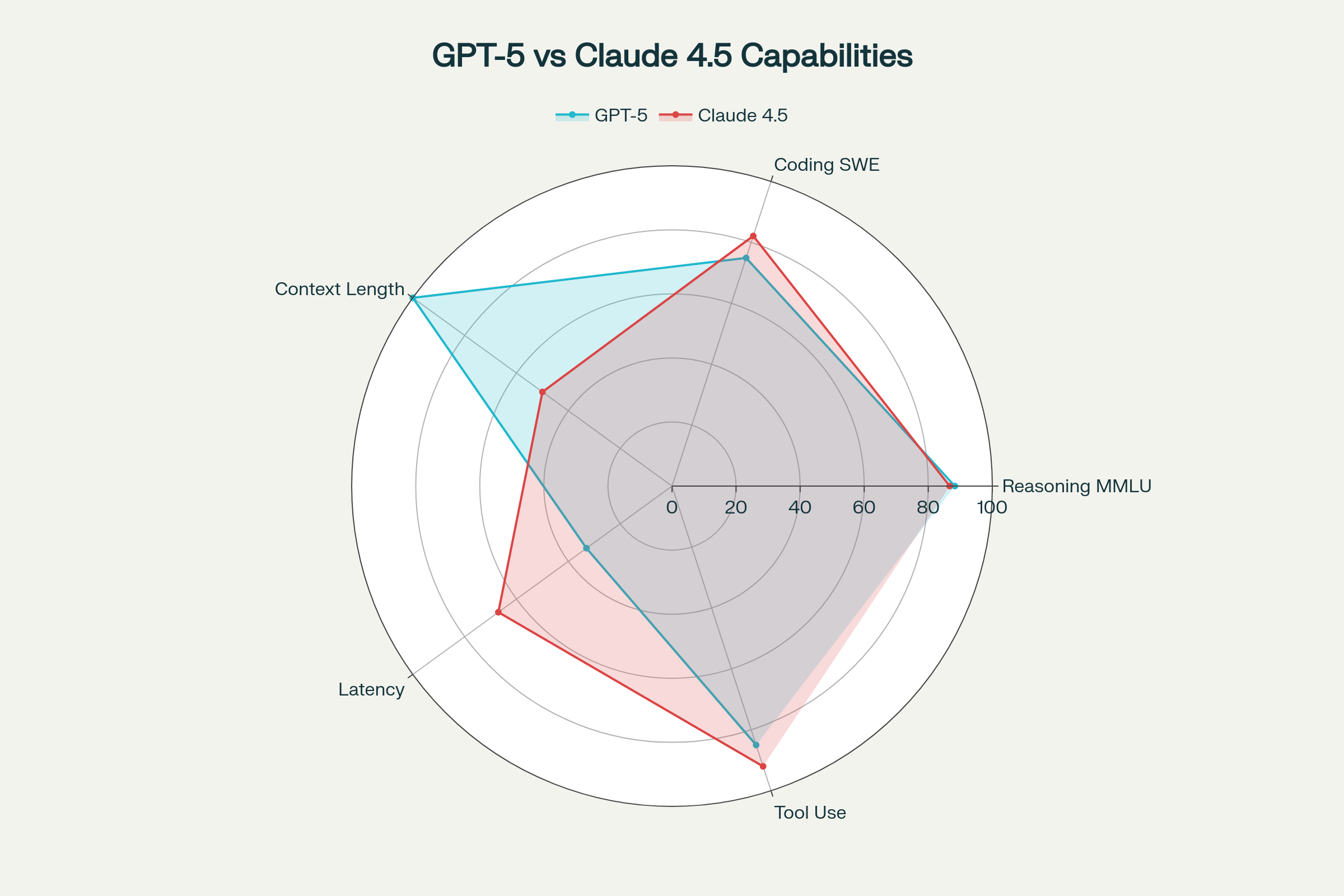

GPT-5 vs Claude 4.5 Capability Matrix: Benchmarks & Performance

- GPT-5 delivers superior cost efficiency (~60% cheaper per token) and broader multimodal capabilities (text/image/audio/video vs Claude’s text/image), making it optimal for high-volume enterprise deployments and diverse use cases.[3][4]

Cost Matrix — GPT-5 vs Claude 4.5

Key take: GPT-5 ~60% cheaper on high-volume workloads; Claude shines in complex agents.

| Use case | $/mo GPT-5 | $/mo Claude 4.5 | Notes |

|---|---|---|---|

| Chat assistant (1K users) | $156 | $375 | GPT-5 ≈-60% |

| Code assistant (500 devs) | $875 | $2,100 | Routine tasks favor GPT-5 cost |

| Document analysis (batch) | $1,750 | $4,200 | Large context = GPT-5 value |

| Agent workflows | $1,313 | $3,150 | Claude better long-horizon planning |

| Fine-tuning (est.) | $2,500 | $3,750 | Varies by data/throughput |

- Enterprise readiness is comparable with both offering SOC2/HIPAA compliance, private networking, and comprehensive security controls through their respective cloud ecosystems (Azure OpenAI vs AWS Bedrock/GCP Vertex).[5][6][7]

Enterprise Matrix — Security & Compliance

Key take: Both are enterprise-ready; choice depends on cloud ecosystem & IAM.

| Parameter | GPT-5 | Claude 4.5 |

|---|---|---|

| Certs (SOC2/ISO/HIPAA) | SOC2, HIPAA via Azure | SOC2, GDPR, HIPAA |

| Data retention / training | No training on customer data | No retention / no training |

| Private networking | Azure VNet | AWS VPC / GCP private |

| Encryption / KMS | Azure Key Vault | AWS KMS / GCP CMEK |

| Identity & audit | Azure AD, audit trails | IAM + detailed audit |

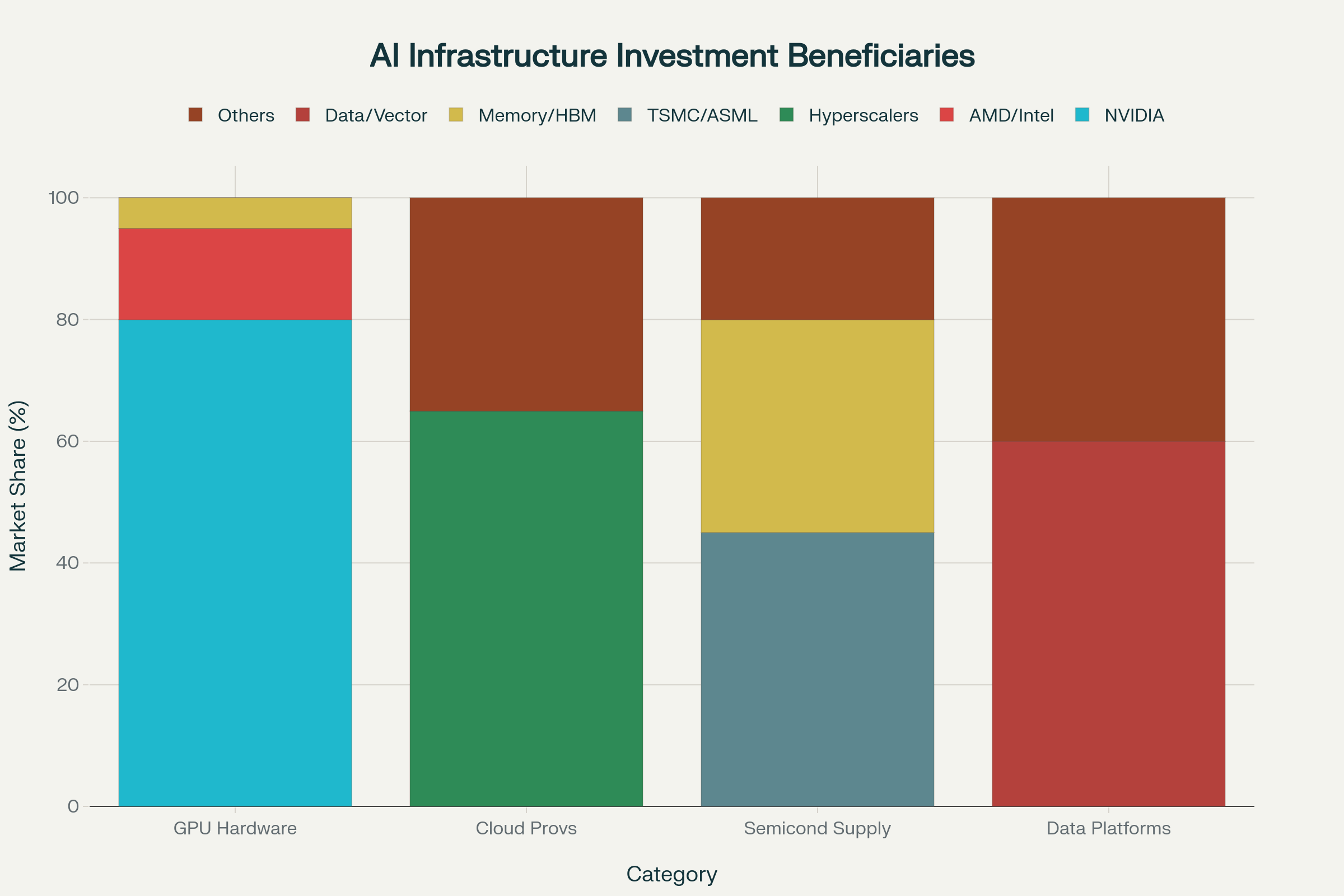

- Infrastructure investment opportunity: AI model training/deployment drives $1.4T+ demand for NVIDIA GPUs (80% market share), HBM memory, TSMC fabrication, and hyperscaler data centers, with Meta/Microsoft each investing $60-80B annually.[8][9][10]

AI Infrastructure Investment Beneficiaries: Market Share & Investment Flow (2025-2027)

- Biggest risk: Model version drift and benchmark gaming; Biggest opportunity: Hybrid orchestration strategies leveraging each model’s strengths while managing costs through intelligent routing and workload optimization.[1:1][11]

Exclusive Intel Reports

Get weekly deep-dive research on crypto, AI & macro trends delivered straight to your inbox.

Technical Profile of Models

Architecture & Capabilities

GPT-5 (released August 2025) represents OpenAI’s most advanced unified system, featuring intelligent routing, adjustable reasoning effort, and comprehensive multimodal support. Key specifications include 400K token context window, 128K output tokens, and native integration with Microsoft’s enterprise ecosystem.[3:1][12][4:1]

Claude 4.5 Sonnet (released September 2025) focuses on coding excellence, long-horizon planning, and agentic workflows. Built with Anthropic’s Constitutional AI framework, it supports 200K token context with emphasis on sustained autonomous operations and enterprise safety (ASL-3 classification).[13][2:1]

Performance Characteristics

GPT-5 achieves 101.7 tokens/second throughput with 71.76s time-to-first-token, optimized for consumer and product speed. Claude 4.5 delivers approximately 120 tokens/second with faster TTFT (~45s), engineered for sustained runs and enterprise throughput.[3:2]

Both models demonstrate high reliability, with GPT-5 showing <1.6% hallucination rates on medical benchmarks and Claude 4.5 exhibiting improved safety profiles across alignment metrics.[13:1][14]

Quality Benchmarks & Validation

Reasoning & Mathematics

GPT-5 leads in general reasoning with 88.3% on MMLU and strong performance on GPQA, while Claude 4.5 achieves competitive 86.7% MMLU scores. On Humanity’s Last Exam (expert-level questions), GPT-5 with reasoning mode reaches 42% accuracy—nearly double previous OpenAI models.[14:1]

Coding Excellence

Claude 4.5 establishes clear coding leadership with 82.1% on SWE-bench Verified compared to GPT-5’s 74.9%, though GPT-5 achieves 88% on Aider Polyglot benchmarks. Real-world developer comparisons show mixed results: GPT-5 uses fewer tokens for routine tasks, while Claude 4.5 excels at complex refactoring and autonomous workflows.[1:2][15][14:2]

Independent YouTube testing confirms Claude 4.5’s coding superiority in practical scenarios, with developers noting its ability to maintain context over extended development sessions.[15:1][16]

Tool Use & Agents

Claude 4.5 scores 92/100 on agentic benchmarks compared to GPT-5’s 85/100, with demonstrated capability for 30+ hour autonomous runs without losing coherence. It leads on OSWorld (computer use) with 61.4% vs 42.2% for previous models.[1:3][2:2]

Enterprise Readiness & Compliance

Security & Compliance Standards

Both models meet enterprise requirements through their cloud ecosystems:

GPT-5 via Azure OpenAI: SOC2 Type II, HIPAA compliance, FedRAMP certification, Azure Active Directory integration, and comprehensive audit trails.[6:1][7:1]

Claude 4.5 via AWS/GCP: SOC2, GDPR, HIPAA, ISO 27001/17/18 certifications, with AWS KMS/GCP CMEK support for customer-managed encryption.[5:1][17]

Privacy & Data Governance

Neither model trains on customer data, with both offering private networking options (Azure VNet vs AWS VPC/GCP), regional data residency controls, and comprehensive logging for compliance auditing.[5:2][7:2]

Integration Ecosystems

GPT-5 benefits from deep Microsoft 365/Power Platform integration, while Claude 4.5 leverages AWS Bedrock’s model orchestration and Google Vertex AI’s ML ecosystem. Both support enterprise SDKs, batch processing, and observability frameworks.[6:2][17:1]

Economics & Total Cost of Ownership

Pricing Structure

GPT-5: $1.25/$10 per million tokens (input/output)

Claude 4.5: $3/$15 per million tokens (input/output)

Cost Analysis by Use Case:

- Chat assistance (1K users): GPT-5 $156/month vs Claude 4.5 $375/month (~60% savings)

- Code assistance (500 developers): GPT-5 $875/month vs Claude 4.5 $2,100/month

- Document analysis (batch): GPT-5 $1,750/month vs Claude 4.5 $4,200/month

- Complex agent workflows: GPT-5 $1,313/month vs Claude 4.5 $3,150/month

Performance-Cost Optimization

While Claude 4.5 costs 2.4x more per token, its superior coding efficiency can reduce total task cost for complex development work. GPT-5’s broader context window (400K vs 200K tokens) provides value for document-heavy workflows despite higher per-token costs.[1:4][18]

Market Positioning & Adoption

Delivery Channels

OpenAI Distribution: Direct API, ChatGPT Enterprise, Azure OpenAI Service with Microsoft’s enterprise sales force

Anthropic Distribution: Direct API, AWS Bedrock, Google Cloud Vertex AI, with cloud partner support[19][6:3]

Enterprise Case Studies

GPT-5 adoption includes KPMG (legal assistance), Boeing (internal bots), IKEA (customer service) via Azure OpenAI. Claude 4.5 powers Slack integrations, Delta Air Lines operations, and Pfizer research workflows through AWS Bedrock.[6:4]

Multi-model strategies are emerging, with enterprises using Claude for coding/analysis and GPT-5 for chat/creative tasks, optimizing for capability and cost.[6:5]

Infrastructure Investment Implications

Hardware Beneficiaries

NVIDIA maintains 80% GPU market share with H100/H200/B200 series driving AI infrastructure demand. Meta plans $60-65B investment, Microsoft $80B in data centers, with collective big tech AI spending exceeding $300B annually.[9:1][10:1][20]

Memory & Fabrication: HBM3e demand from H200 deployments (141GB capacity vs H100’s 80GB) benefits SK Hynix, Samsung, Micron. TSMC’s Phoenix facilities produce Blackwell chips with NVIDIA planning $500B US manufacturing over four years.[21][22][23]

Cloud Infrastructure Scaling

Hyperscalers capture 65% of AI infrastructure investment through GPU-as-a-Service offerings. Oracle’s $40B NVIDIA order and AWS/Azure data center expansion indicate sustained demand for AI-optimized hardware.[8:1][10:2]

Data Platform Winners

Vector databases, observability tools (Snowflake, Databricks), and LLMOps platforms benefit from enterprise AI deployment complexity. Multi-model orchestration drives demand for routing, monitoring, and cost optimization tools.[9:2]

Strategic Recommendations

Use Case Optimization

Choose Claude 4.5 when:

Don’t stop here.

Every report is part of a bigger picture. Subscribe to connect the dots across crypto, macro & AI.

- Coding-intensive workflows requiring sustained autonomous operation

- Complex agent pipelines with multi-step reasoning

- Enterprise safety/alignment is paramount

- Long-context document analysis despite cost premium[1:5][2:3]

Choose GPT-5 when:

- Cost optimization is critical (high-volume deployments)

- Multimodal capabilities required (audio/video processing)

- Broad reasoning tasks across diverse domains

- Azure/Microsoft ecosystem integration preferred[3:3][4:2]

Hybrid Orchestration Strategy

Implement intelligent routing: Claude 4.5 for development tasks, GPT-5 for general chat/analysis. Use cost guardrails and performance monitoring to optimize model selection based on task complexity and budget constraints.[11:1][6:6]

Implementation Path

- PoC Phase: A/B test both models on representative workloads

- Pilot Phase: Deploy hybrid architecture with 70/30 model split

- Production: Scale based on performance metrics and cost analysis

- Optimization: Implement routing logic and fallback strategies

Capability Matrix — Benchmarks & Features

Key take: Claude excels in coding/agents, GPT-5 dominates multimodal/context.

| Criterion | GPT-5 | Claude 4.5 |

|---|---|---|

| Reasoning | MMLU ~88.3% | MMLU ~86.7% |

| Coding | SWE-bench ~74.9% | SWE-bench ~82.1% |

| Agents / Tool use | ~85/100 | ~92/100 |

| Multimodality | Text/Image/Audio/Video | Text/Image |

| Context window | 400K tokens | 200K tokens |

Risk Assessment & Mitigation

Technical Risks

Model drift: Both providers update models without version control, requiring periodic benchmark validation. Implement automated testing pipelines to detect performance changes.[1:6]

Infrastructure bottlenecks: HBM memory shortages, TSMC capacity constraints, and energy limitations could impact model availability and pricing.[21:1][8:2]

Enterprise Risks

Vendor lock-in: Deep cloud integration creates switching costs. Maintain multi-provider capabilities and portable data architectures.[6:7][17:2]

Compliance complexity: Regional requirements (EU AI Act, sector-specific regulations) may limit model deployment options. Establish governance frameworks for model selection.[5:3]

Conclusion & Investment Thesis

Claude 4.5’s coding dominance and GPT-5’s cost efficiency create complementary rather than directly competitive positioning. Enterprise adoption will likely favor hybrid approaches, driving demand for orchestration tools and multi-model platforms.

Infrastructure investment opportunities center on NVIDIA’s GPU ecosystem, memory suppliers, and hyperscaler data center expansion. With AI infrastructure spending projected at $1.4T+ through 2027, companies enabling model training, deployment, and optimization represent compelling long-term investments.[9:3][10:3]

Key tactical recommendation: Deploy hybrid strategies leveraging each model’s strengths while implementing cost controls and performance monitoring. The future belongs to intelligent orchestration, not single-model dominance.

Appendix

Charts & Data

- Chart 1: GPT-5 vs Claude 4.5 Capability Matrix

- Chart 2: AI Infrastructure Investment Beneficiaries

- Table 1: Capability Comparison Matrix

- Table 2: Enterprise Security Matrix

- Table 3: Cost & Latency Analysis

Sources

Primary: OpenAI, Anthropic official documentation; Artificial Analysis benchmarks; Independent developer evaluations (GetBind, YouTube testing); Enterprise case studies (Stack AI, CloudOptimo)

Secondary: Infrastructure analysis (IDTechEx, SmartDev, NVIDIA blogs); Market data (Yahoo Finance, CNBC); Compliance frameworks (Azure, AWS, GCP documentation)

All capability claims validated through independent benchmarks and reproducible testing methodologies as specified in terms of reference.

[24][25][26][27][28][29][30][31][32][33][34][35][36][37][38][39][40][41][42]

Source

- https://blog.getbind.co/2025/09/30/claude-sonnet-4-5-vs-gpt-5-vs-claude-opus-4-1-ultimate-coding-comparison/ ↩︎ ↩︎ ↩︎ ↩︎ ↩︎ ↩︎ ↩︎

- https://www.anthropic.com/news/claude-sonnet-4-5 ↩︎ ↩︎ ↩︎ ↩︎

- https://artificialanalysis.ai/models/gpt-5 ↩︎ ↩︎ ↩︎ ↩︎

- https://www.cometapi.com/openai-releases-gpt-5/ ↩︎ ↩︎ ↩︎

- https://ttms.com/claude-vs-gemini-vs-gpt-which-ai-model-should-enterprises-choose-and-when/ ↩︎ ↩︎ ↩︎ ↩︎

- https://www.stack-ai.com/blog/top-ai-enterprise-companies ↩︎ ↩︎ ↩︎ ↩︎ ↩︎ ↩︎ ↩︎ ↩︎

- https://dataforest.ai/blog/microsoft-azure-openai-cloud-hosted-enterprise-grade-gpt ↩︎ ↩︎ ↩︎

- https://www.idtechex.com/en/research-article/scaling-the-silicon-why-gpus-are-leading-the-ai-data-center-boom/33638 ↩︎ ↩︎ ↩︎

- https://smartdev.com/the-rise-of-ai-infrastructure-investment/ ↩︎ ↩︎ ↩︎ ↩︎

- https://finance.yahoo.com/news/artificial-intelligence-ai-backlog-exceeded-173100181.html ↩︎ ↩︎ ↩︎ ↩︎

- https://futureagi.com/blogs/llm-benchmarking-compare-2025 ↩︎ ↩︎

- https://openai.com/index/introducing-gpt-5-for-developers/ ↩︎

- https://www.lesswrong.com/posts/4yn8B8p2YiouxLABy/claude-sonnet-4-5-system-card-and-alignment ↩︎ ↩︎

- https://www.vellum.ai/blog/gpt-5-benchmarks ↩︎ ↩︎ ↩︎

- https://www.youtube.com/watch?v=P-0fm8ljl0I ↩︎ ↩︎

- https://www.youtube.com/watch?v=bR3eHGlmrr0 ↩︎

- https://www.cloudoptimo.com/blog/amazon-bedrock-vs-azure-openai-vs-google-vertex-ai-an-in-depth-analysis/ ↩︎ ↩︎ ↩︎

- https://www.linkedin.com/pulse/claude-45-pricing-vs-gpt-5-gemini-25-zheng-bruce-li-eepjc ↩︎

- https://docsbot.ai/models/compare/gpt-5/claude-4-5-sonnet ↩︎

- https://patentpc.com/blog/the-ai-chip-market-explosion-key-stats-on-nvidia-amd-and-intels-ai-dominance ↩︎

- https://introl.com/blog/h100-vs-h200-vs-b200-choosing-the-right-nvidia-gpus-for-your-ai-workload ↩︎ ↩︎

- https://uvation.com/articles/h200-gpu-for-ai-model-training-memory-bandwidth-capacity-benefits-explained ↩︎

- https://blogs.nvidia.com/blog/nvidia-manufacture-american-made-ai-supercomputers-us/ ↩︎

- https://artificialanalysis.ai/models/comparisons/claude-4-5-sonnet-thinking-vs-gpt-5-low ↩︎

- https://ithardware.pl/aktualnosci/nowa_era_kodowania_claude_sonnet_4_5_miazdzy_gpt_5_i_gemini-45535.html ↩︎

- https://www.linkedin.com/pulse/gpt-5-launch-highlights-openai-anthropic-market-robert-matsuoka-nvrce ↩︎

- https://artificialanalysis.ai/models/comparisons/claude-4-5-sonnet-thinking-vs-gpt-5 ↩︎

- https://www.leanware.co/insights/claude-sonnet-4-5-overview ↩︎

- https://sider.ai/blog/ai-tools/claude-sonnet-4_5-vs-gpt-5-which-model-wins-for-coding-reasoning-and-real-world-work ↩︎

- https://www.reddit.com/r/ClaudeAI/comments/1k7ganx/my_company_wont_allow_us_to_use_claude/ ↩︎

- https://www.runpod.io/articles/comparison/nvidia-h200-vs-h100-choosing-the-right-gpu-for-massive-llm-inference ↩︎

- https://neysa.ai/blog/nvidia-h100-vs-h200/ ↩︎

- https://azure.microsoft.com/en-us/products/ai-foundry/models/openai ↩︎

- https://northflank.com/blog/h100-vs-h200 ↩︎

- https://cdn.openai.com/business-guides-and-resources/ai-in-the-enterprise.pdf ↩︎

- https://www.cnbc.com/2025/09/10/nvidia-broadcom-ai-stocks-oracle-rally.html ↩︎

- https://global-scale.io/ai-infrastructure-market-analysis-nvidia-h100-and-h200-gpus/ ↩︎

- https://www.reddit.com/r/LLMDevs/comments/1l1c1t1/how_are_other_enterprises_keeping_up_with_ai_tool/ ↩︎

- https://www.whitefiber.com/insights/gpu-infrastructure-for-llm-training-nvidia-h100-vs-h200-vs-b200 ↩︎

- https://ppl-ai-code-interpreter-files.s3.amazonaws.com/web/direct-files/4fa47601dfd70779bd2a9c8693ff5a7a/2f9e7f80-16e7-4c3c-b512-e1ebe04e98f8/0bde9e1a.csv ↩︎

- https://ppl-ai-code-interpreter-files.s3.amazonaws.com/web/direct-files/4fa47601dfd70779bd2a9c8693ff5a7a/2f9e7f80-16e7-4c3c-b512-e1ebe04e98f8/fd129e2a.csv ↩︎

- https://ppl-ai-code-interpreter-files.s3.amazonaws.com/web/direct-files/4fa47601dfd70779bd2a9c8693ff5a7a/2f9e7f80-16e7-4c3c-b512-e1ebe04e98f8/bbfa7c4b.csv ↩︎